Quick Summary: ChatGPT Health and Claude for Healthcare signal a shift toward purpose-built AI in healthcare. In this blog, we talk about what these platforms offer and how they impact stakeholders. We also discuss the next steps for healthcare organizations as AI adoption moves into the next phase.

The healthcare industry is moving beyond early AI experimentation toward more purpose-built solutions. Many organizations have already tested AI for tasks like billing or patient communication, and the AI in healthcare market is projected to be worth $188 billion by 2030. Recent platform launches, however, suggest a shift toward deeper, healthcare-specific capabilities.

In January 2026, OpenAI and Anthropic introduced ChatGPT Health and Claude for Healthcare. These are AI platforms designed specifically for medical contexts, data sensitivity, and workflows. Their arrival signals a new stage in how AI may be applied to patient care and clinical operations.

For healthcare organizations, this raises important questions:

- What do these tools actually offer?

- Who are they designed for?

- What does their emergence mean for future AI adoption?

Read on as we answer all these questions and more.

What Are ChatGPT Health and Claude for Healthcare?

ChatGPT Health and Claude for Healthcare represent a new class of AI platforms designed specifically for healthcare use cases. They are conversational, assistive systems intended to operate within medical contexts, including healthcare terminology, patient information, regulatory considerations, and clinical or operational workflows.

This differs from earlier approaches where general-purpose AI tools were later adapted for healthcare. These platforms are being introduced with healthcare-specific scenarios, privacy considerations, and compliance requirements in mind.

At a high level, both platforms are designed to:

- Recognize healthcare language and clinical context

- Support interaction with health-related data under defined safeguards

- Assist patients and healthcare professionals beyond basic chat or rule-based automation

Below is a brief overview of what each platform is designed to offer and how they differ.

ChatGPT Health

ChatGPT Health is OpenAI’s health-focused experience within the ChatGPT platform. Introduced on January 7, 2026, it lets users connect personal medical records and wellness data (with appropriate permissions). With that, they can receive clearer, more contextual explanations of lab results, medical terms, health trends, and visit preparation.

The experience is provided within a separate health workspace. It supports understanding and planning only and does not replace professional diagnosis or treatment.

Claude for Healthcare

Claude for Healthcare is Anthropic’s healthcare-oriented AI offering. Launched publicly on January 11, 2026, it extends Claude’s capabilities to both individuals and enterprises. It supports interactions with personal health information and selected industry data sources.

The platform is also designed to assist with operational and administrative workflows, such as prior authorization review, medical coding, and compliance-related tasks.

Here’s how the two platforms differ:

| Aspect | ChatGPT Health | Claude for Healthcare |
|---|---|---|
| Primary Emphasis | Consumer‑focused health insights | Individual + enterprise workflows |
| Enterprise Tools | Limited enterprise integration | Built for clinical/operational tasks |
| Industry Databases | Personal records only | Includes medical/insurance databases |
| Regulatory Positioning | Consumer privacy controls | HIPAA‑ready infrastructure |
| Typical Users | Patients and individuals | Patients, clinicians, payers, organizations |

What Do These AI Platforms Mean for the Healthcare Industry?

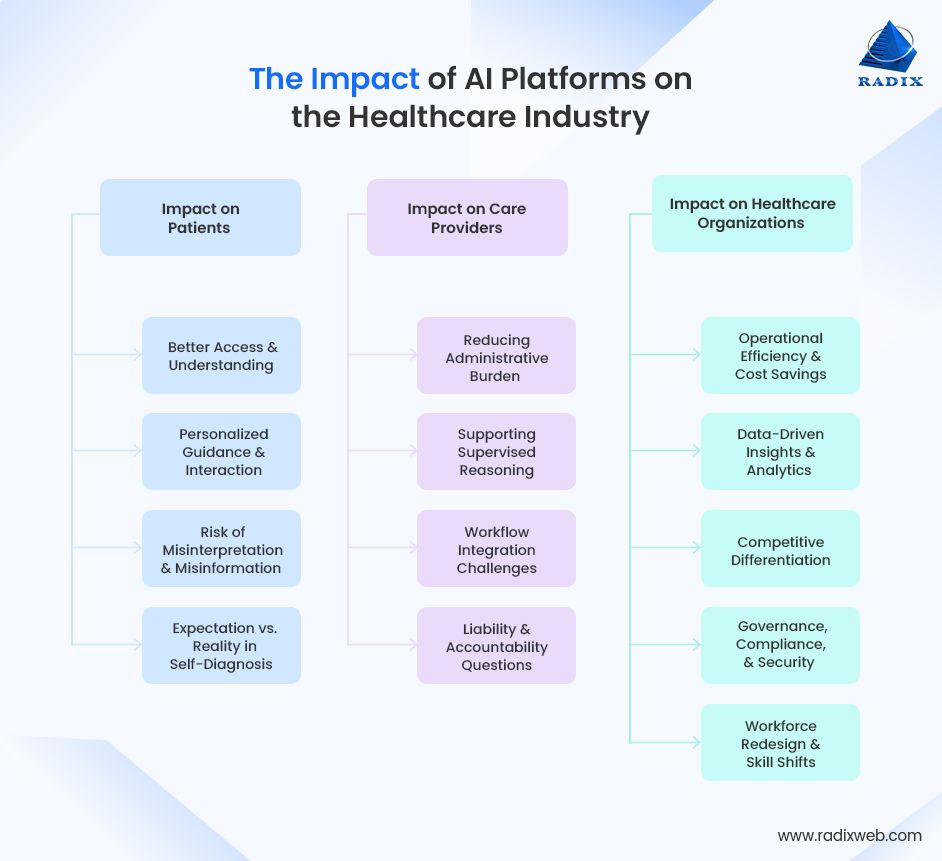

The introduction of ChatGPT Health and Claude for Healthcare reflects a broader industry trend: a move from generic AI tools to more practical, healthcare-aligned applications. This shift presents both opportunities and challenges across different stakeholders.

1. Impact on Patients — Empowerment Comes with Caution

AI platforms are opening new avenues for patients to engage with their healthcare. But this empowerment must be grounded in trust and safety.

a. Better Access and Understanding

AI can translate complex medical language into plain, patient‑friendly explanations. This helps users understand lab results, diagnoses, and treatment options without hours of searching or confusion. It also increases health literacy and enables patients to participate more meaningfully in care decisions.

b. Personalized Guidance and Interaction

When connected to personal health data (medical records, fitness apps), these platforms can provide contextual insights rather than generic responses. Health recommendations can therefore be tailored to the individual, which improves engagement with care plans and follow‑ups.

c. Risk of Misinterpretation and Misinformation

AI isn’t a medical professional. Models sometimes offer confident but incorrect outputs (“hallucinations”). Patients who rely on AI alone (without professional consultation!) risk misunderstandings or delays in care.

d. Expectation vs. Reality in Self‑Diagnosis

While platforms like ChatGPT Health aim to supplement healthcare, they don’t replace professional evaluation. Misuse can lead to harm if patients treat AI findings as a definitive diagnosis.

2. Impact on Care Providers — Efficiency Gains and New Responsibilities

For clinicians and care teams, these AI platforms offer significant workflow support but also require careful adoption strategies:

a. Reducing Administrative Burden

Large language models can automate or assist with:

- Documentation

- Summarization of patient histories

- Extracting relevant details from EHRs

This can free clinicians from time‑consuming paperwork and, in turn, give them more time for face‑to‑face care with patients.

b. Supporting Clinical Reasoning (Under Supervision)

AI can quickly surface relevant evidence, flag trends, or summarize large data sets. It offers decision support cues rather than clinical orders. However, clinicians must verify and contextualize outputs because AI doesn’t replace medical training.

c. Workflow Integration Challenges

Integrating AI into clinical workflows isn’t plug‑and‑play, and several healthcare tech challenges come with the process. Data quality issues (say, messy EHR fields) and interoperability gaps can lead to inaccurate or misleading outputs unless organizations invest in proper integration and governance.

d. Liability and Accountability Questions

When AI contributes to clinical decisions, who bears responsibility? There are emerging concerns about the ownership of AI‑influenced decisions and how clinicians document AI‑derived insights. Clear policies and governance frameworks are essential.
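One way to make ownership of AI‑influenced decisions explicit is to record each suggestion alongside the clinician's review. The sketch below is only an illustration under stated assumptions: the `AiSuggestionRecord` class, its field names, and the rule that a rationale is mandatory are all hypothetical and do not describe how ChatGPT Health, Claude for Healthcare, or any EHR system works.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AiSuggestionRecord:
    """One AI-derived suggestion plus the clinician's decision (hypothetical schema)."""
    patient_ref: str                    # internal reference, not a raw identifier
    model_name: str                     # which AI tool produced the suggestion
    suggestion: str                     # what the tool proposed
    reviewed_by: Optional[str] = None   # clinician who reviewed it, if any
    accepted: Optional[bool] = None     # None until a review has happened
    rationale: str = ""                 # why the clinician accepted or rejected it
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def review(self, clinician_id: str, accepted: bool, rationale: str) -> None:
        """Record the clinician's decision; a rationale is required either way."""
        if not rationale.strip():
            raise ValueError("A rationale is required to document the decision.")
        self.reviewed_by = clinician_id
        self.accepted = accepted
        self.rationale = rationale

    @property
    def is_actionable(self) -> bool:
        """Only suggestions a clinician has reviewed and accepted count as actionable."""
        return self.reviewed_by is not None and self.accepted is True
```

Under this design, a suggestion stays non-actionable until a named clinician accepts it, so the record itself shows who owned the final decision.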

3. Impact on Healthcare Organizations — Strategic Transformation (Not Just Automation)

For hospitals, health systems, payers, and healthcare enterprises, these tools offer opportunities far beyond isolated use cases, if adopted thoughtfully:

a. Operational Efficiency and Cost Savings

AI can accelerate processes like scheduling, documentation drafting, claims processing, and prior authorizations. This leads to measurable reductions in operational overhead and faster throughput across departments.

b. Data‑Driven Insights and Analytics

Many insights are buried in patient feedback and operational logs. Automating the extraction and interpretation of such unstructured data surfaces those insights and lets organizations base strategic decisions on them.

c. Competitive Differentiation

Early adopters who successfully integrate AI into virtual healthcare setups, patient engagement, care delivery, and administrative workflows can differentiate on experience, efficiency, and outcomes. This increasingly influences patient choice and provider reputation.

d. Governance, Compliance, and Security Imperatives

AI adoption isn’t just technical; it has an organization-wide impact. Leaders must build governance frameworks that enforce responsible use, monitor outcomes, and ensure data privacy and security. Healthcare is highly regulated. Any AI integration must meet or exceed standards like HIPAA and equivalent protections.
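As a small illustration of a privacy safeguard, the sketch below masks a few obvious identifiers in free text before that text is passed to any AI tool. The patterns and the `redact` helper are assumptions made for this example. They cover only emails, US-style Social Security numbers, and US-style phone numbers, so they fall well short of what HIPAA de-identification requires.

```python
import re

# Illustrative patterns only; real de-identification covers many more identifier types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Call 555-123-4567 or mail jo@example.com, SSN 123-45-6789"
print(redact(note))  # Call [PHONE] or mail [EMAIL], SSN [SSN]
```

A governance policy would typically pair a step like this with access controls and audit logging rather than rely on pattern matching alone.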

e. Workforce Redesign and Skill Shifts

While AI can reduce repetitive tasks, it also prompts role changes. Staff can move from routine transcription to oversight and quality control of AI outputs. Investment in training and change management, however, is crucial.

What Should Healthcare Organizations Do Next?

The global AI market is expected to grow at a 31.8% CAGR between 2021 and 2030. As healthcare AI capabilities continue to evolve, organizations are increasingly evaluating how and where AI can be applied responsibly and effectively. Progress depends not only on technology, but on readiness, prioritization, and governance.
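To put the quoted growth rate in perspective, the short calculation below compounds a 31.8% annual rate over the nine years from 2021 to 2030. The `growth_multiple` helper is a hypothetical name for this example, and the result is simple arithmetic on the quoted rate, not an independent market forecast.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a value becomes after compounding for `years`."""
    return (1 + cagr) ** years


# 31.8% per year, compounded over the nine years between 2021 and 2030.
multiple = growth_multiple(0.318, 2030 - 2021)
print(f"{multiple:.1f}x")  # 12.0x
```

In other words, the quoted rate implies a market roughly twelve times its 2021 size by 2030.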

The following steps outline practical considerations for healthcare organizations moving from exploration toward implementation.

Step 1: Evaluate AI-Readiness

Before investing in AI solutions, organizations should assess their current state.

AI readiness extends beyond tools and models. It includes data quality, governance structures, infrastructure, and regulatory alignment. Key questions to consider include:

- Are clinical records accessible, consistent, and suitable for AI use cases?

- Can existing IT systems, like EHR or EMR, support AI integration without disrupting workflows?

- Are regulatory requirements such as HIPAA, GDPR, and regional policies clearly understood?
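The first question above, whether records are consistent enough for AI use, can be partly checked with a simple completeness measure. The sketch below assumes records are available as Python dictionaries. The field names in `REQUIRED_FIELDS` are placeholders for this example and are not taken from any specific EHR schema.

```python
from typing import Dict, Iterable, Sequence

# Hypothetical field names; substitute the fields your use case depends on.
REQUIRED_FIELDS = ("patient_id", "date_of_birth", "diagnosis_code")


def field_completeness(
    records: Iterable[dict], required: Sequence[str] = REQUIRED_FIELDS
) -> Dict[str, float]:
    """Return, per required field, the share of records with a non-empty value."""
    rows = list(records)
    if not rows:
        return {name: 0.0 for name in required}
    result = {}
    for name in required:
        filled = 0
        for row in rows:
            value = row.get(name)
            # Treat missing keys, None, and blank strings as empty.
            if value is not None and str(value).strip() != "":
                filled += 1
        result[name] = filled / len(rows)
    return result


sample = [
    {"patient_id": "a", "date_of_birth": "1980-01-01", "diagnosis_code": "E11.9"},
    {"patient_id": "b", "date_of_birth": "", "diagnosis_code": None},
]
print(field_completeness(sample))
```

Fields with low completeness are a signal that data cleanup may need to come before any AI pilot that depends on them.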

Step 2: Explore High-Impact Use Cases

AI delivers the most value when applied selectively. Rather than broad deployment, organizations benefit from targeting use cases with clear operational or clinical relevance. Common starting points include:

- Patient engagement using AI-assisted communication, reminders, and support tools

- Clinical documentation including drafting, summarization, and record organization

- Workflow optimization for administrative processes such as billing, scheduling, and prior authorizations

- Predictive analytics for identifying risk patterns and care gaps earlier

Step 3: Engage with Expert AI Partners

AI software development spans technical, clinical, and regulatory domains. Successful adoption typically requires:

- Clear governance and accountability structures

- Expertise in healthcare data, AI programming languages, compliance, and system integration

- Ongoing monitoring of performance, accuracy, and risk

Expert support from experienced AI developers helps put these elements in place.

Get Started Before It Is Too Late

AI is transforming healthcare. Platforms like ChatGPT Health and Claude for Healthcare highlight how the industry is moving toward more specialized, context-aware tools. For healthcare organizations, the focus is shifting too. Instead of wondering whether to explore AI, the question is now how it can be applied responsibly and securely.

At Radixweb, we partner with healthcare organizations to plan, implement, and scale AI initiatives confidently, safely, and effectively. Schedule a no-cost AI consultation to see how we can help you elevate patient experiences and secure your place at the forefront of healthcare innovation.

