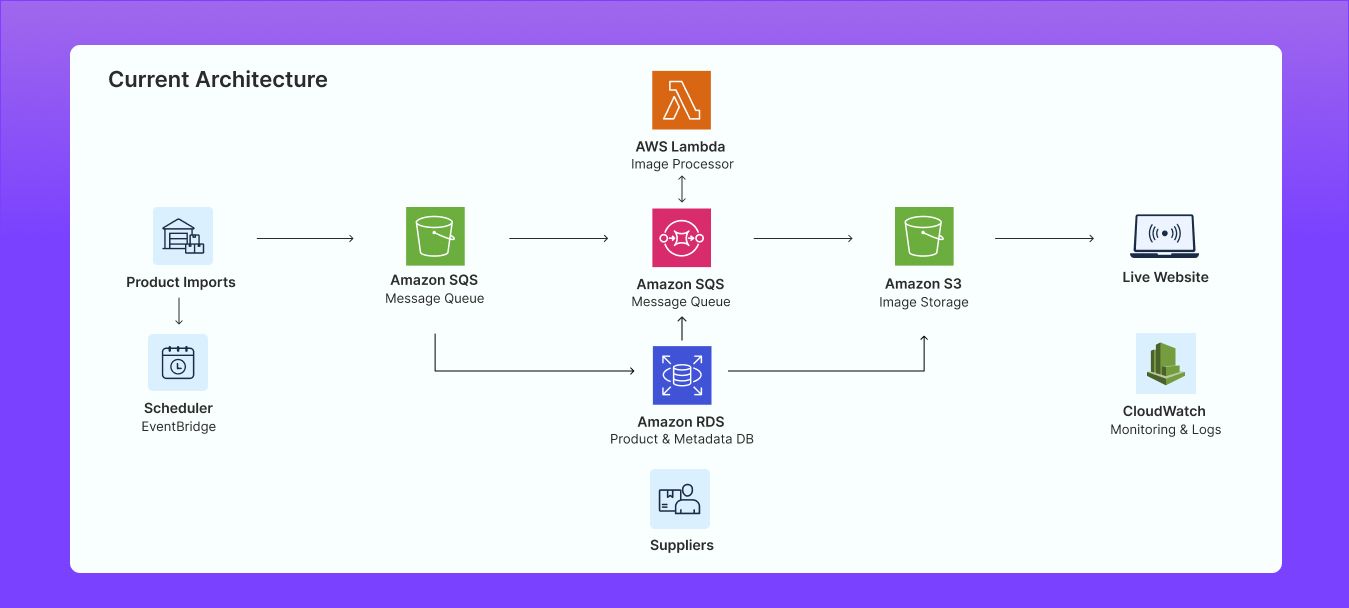

Lambda handled end-to-end image-related operations, including downloading files from the supplier’s CDN, converting them to WebP, and uploading them to S3.


About the Client

Our client is a U.S.-based wholesale dropshipping platform that connects online retailers with a large network of verified American suppliers. It supports over 3,000 domestic suppliers and a catalog of more than 500,000 products, and it offers fast shipping through major channels like Shopify, Amazon, eBay, and Walmart.

Country

USA

Industry

Digital Commerce

Time Invested

3 Weeks

Team Size

6-10 Experts

Business Challenges

The client team handled supplier imports through spreadsheets that included product details and up to 10 images per item. Each image had to be pulled from the supplier’s CDN, converted to WebP, stored in S3, and logged in the database. Import batches often ranged from 2,000 to more than 8,000 products and were triggered by a cron job running four times a day. Once volumes crossed 4,000-5,000 products, the server struggled, causing 10-20 minutes of downtime and 2-3 days of delay in image processing. In some cases, the images had not finished processing even after several days.

Project Overview

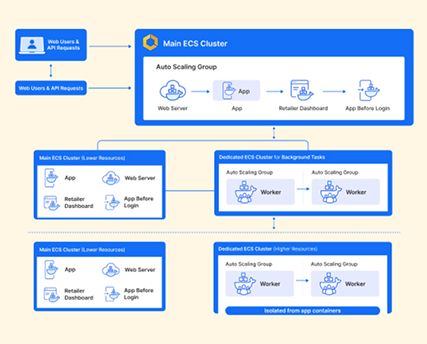

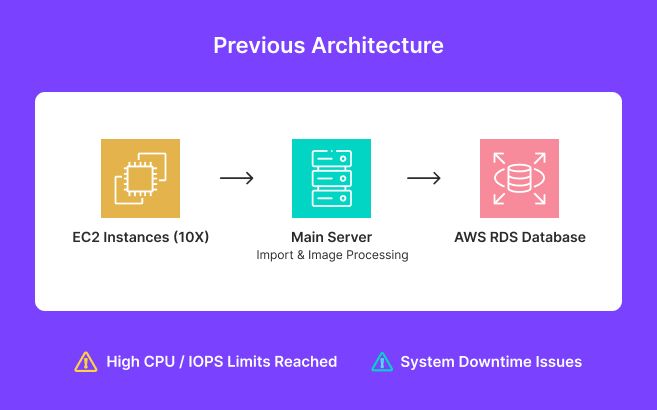

Our assessment showed that routine product imports were disrupting platform stability because too many tasks were placed on the main server. Ten EC2 instances were used only for downloading images, while the main application server handled all the read/write operations (reading the data from Excel and storing it in the database), image conversion, and resizing.

This put additional load on the AWS RDS database server. Most of the time, its CPU and IOPS were at their limits, which caused downtime and left image processing stuck for days.

We proposed moving to the serverless AWS Lambda service. We shifted all the image downloading and processing code from the server to a Lambda function and deleted all 10 nano EC2 instances. We set this process to run every 2 hours to check whether there are any images to download and process. Our software development team also implemented SQS (Simple Queue Service), which passes the images to Lambda one by one for background processing. We increased the RDS IOPS limit to 5,000 to support frequent, high-volume import cycles.

Client Feedback

Our system uptime has remained above 99.9%, and platform response times have dramatically improved. Their ability to understand complex requirements, pivot when priorities shift, and deliver quality work on time has made them a critical and trusted extension of our internal team.

The entire workflow needed a deeper review. The client was already dealing with very frequent downtimes and couldn’t absorb more business impact. We invested a lot of time to dig into the problem and then rebuilt the process in a way that gives them a setup to handle growth and a process they won’t have to revisit every few months.

Nipam Chokshi

Project Challenges

- Handling large product batches where simultaneous image downloads, conversions, and database writes overloaded the main server and caused recurring downtime.

- Identifying capacity issues in EC2 instances, cron jobs, and application logic to understand why processing stopped or failed during high-volume import cycles.

- Designing a serverless architecture that balanced Lambda concurrency, SQS throughput, and S3 operations without creating new performance or cost issues.

- Stabilizing RDS performance by managing heavy read/write spikes during imports and determining the right IOPS allocation to prevent resource exhaustion.

Solutions Delivered

Serverless Pipeline for Image Processing

We modernized the image processing pipeline using a cloud-native, serverless architecture on AWS Lambda, powered by event-driven workflows that automatically scale to handle high-volume product imports with greater speed, resilience, and operational efficiency.

Replacing EC2 Nano Instances

We replaced the ten nano EC2 instances with a Lambda-based workflow to handle every image task independently. There’s no need to manage multiple servers. Each image job runs in an isolated environment without depending on fixed instance capacity.

Queue-Driven Workflow Implementation

We introduced SQS to feed image tasks to Lambda in a controlled order. Each image enters the queue and is picked up only when Lambda is ready to process it. This prevented concurrency spikes, reduced retry loops, and removed the timing issues that previously caused image jobs to stall or fail during heavy imports.
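To make the queue-driven flow concrete, here is a minimal sketch of a Lambda handler consuming SQS messages. It is not the client's code: the message shape (`source_url`) and the `process_image` placeholder are assumptions for illustration. It uses the standard SQS partial batch response format, so a single bad image is retried without reprocessing the rest of the batch.

```python
import json
from typing import Any, Callable, Dict, List


def process_image(task: Dict[str, Any]) -> None:
    """Hypothetical placeholder for download -> WebP conversion -> S3 upload."""
    if not task.get("source_url"):
        raise ValueError("task is missing source_url")


def handle_batch(
    event: Dict[str, Any],
    worker: Callable[[Dict[str, Any]], None] = process_image,
) -> Dict[str, List[Dict[str, str]]]:
    """Process each SQS record and report only the failed ones back to SQS."""
    failures = []
    for record in event.get("Records", []):
        try:
            worker(json.loads(record["body"]))
        except Exception:
            # Listing the messageId asks SQS to retry just this message.
            # This requires ReportBatchItemFailures on the event source mapping.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


def lambda_handler(event, context):
    # Entry point Lambda would call for each batch of SQS messages.
    return handle_batch(event)
```

In a setup like this, the pace at which Lambda drains the queue is typically governed by the batch size and maximum concurrency configured on the SQS event source mapping rather than by the handler itself.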

Automated Batch Checks

We implemented an automated, time-triggered image pipeline that runs on a two-hour cadence. Each cycle intelligently detects new image records, queues them via SQS, and asynchronously invokes Lambda to process them in the background. This ensured continuous, hands-free batch clearing, eliminating the need for manual developer intervention.
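The enqueue step of each two-hour cycle could look roughly like the sketch below. The row fields (`image_id`, `source_url`) are hypothetical; the one hard constraint reflected here is that an SQS `SendMessageBatch` call accepts at most 10 entries, so pending records have to be chunked.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

SQS_MAX_BATCH = 10  # SendMessageBatch accepts at most 10 entries per call


def build_batches(
    image_rows: Iterable[Dict[str, Any]],
) -> Iterator[List[Dict[str, str]]]:
    """Group pending image rows into SQS batch entries of up to 10 messages."""
    batch: List[Dict[str, str]] = []
    for row in image_rows:
        # "Id" must be unique within a batch; the image id is assumed unique.
        batch.append({"Id": str(row["image_id"]), "MessageBody": json.dumps(row)})
        if len(batch) == SQS_MAX_BATCH:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch
```

Each yielded list can be passed as the `Entries` argument of boto3's `send_message_batch`, together with the queue URL. Keeping the chunking separate from the AWS call makes it easy to test without network access.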

Separating Image Workloads from Core Site

By shifting all processing away from the main server, we isolated intensive operations from the spreadsheet import flow. This freed the website from unnecessary load and improved overall stability during routine supplier onboarding activities.

Increasing Database Throughput Capacity

We increased the RDS IOPS allocation to 5,000, which gave the system enough headroom to handle frequent read/write bursts. This stabilized the import workflow and prevented failures caused by resource exhaustion on the database layer.


Core Technologies Used

AWS Lambda

Amazon SQS

SQS acted as the control layer for processing flow. All the image records were pushed into the queue, and Lambda pulled tasks at a steady pace to prevent sudden spikes during large imports.

Amazon S3

S3 served as the storage layer for all processed images. Once Lambda completed the conversion, the WebP file was written directly to the correct S3 path, and the generated URL was saved back into the product record.

Amazon RDS

RDS stored all product and image metadata required for imports. Import cycles generated heavy write operations, so the IOPS capacity was increased to handle the higher demand.

Amazon EventBridge Scheduler

EventBridge triggered the pipeline automatically every two hours. Each trigger checked for new image records, populated the SQS queue, and initiated Lambda processing.

Business Benefits

Same-Day Image Processing

The new serverless workflow processed images within 4-5 hours of each product import, compared to the earlier 2-5 day delays. Our client can now keep up with daily supplier activity and maintain a consistent publishing cycle without backlog buildup.

Zero Downtime During Heavy Imports

Previously, large imports caused 10-20 minutes of downtime due to server overload. With processing moved to Lambda and SQS, the platform stayed fully available even during batches of 5,000-8,000+ products, laying groundwork for future BI enhancements.

Faster Publishing Turnaround

Suppliers no longer have to wait several days for their products to go live. Image processing now completes the same day. Onboarding speed has improved, and suppliers can update their catalogs more frequently without operational delays.

Scalability with 100k Images

We ran stress tests that processed around 100,000 images within 4-5 hours. This validated that the new architecture of the dropshipping platform could handle extreme workloads for potential future catalog expansion without degrading performance.
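As a rough back-of-the-envelope check, the stress-test figures can be turned into a sustained throughput and compared against the largest import described earlier (8,000 products with up to 10 images each). The calculation below is our own arithmetic on the stated numbers, assuming throughput stays roughly constant, not an additional measurement.

```python
def images_per_second(images: int, hours: float) -> float:
    """Average sustained throughput over a run."""
    return images / (hours * 3600)


slow = images_per_second(100_000, 5)  # lower bound from the stress test
fast = images_per_second(100_000, 4)  # upper bound from the stress test

worst_case_images = 8_000 * 10  # 8,000 products x up to 10 images each

print(f"{slow:.2f} to {fast:.2f} images/s")
# prints "5.56 to 6.94 images/s"
print(f"{worst_case_images / fast / 3600:.1f} to {worst_case_images / slow / 3600:.1f} hours")
# prints "3.2 to 4.0 hours"
```

Under that assumption, even a worst-case 80,000-image batch fits within the same-day window reported above.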
