Combined RAG with generative AI for legal document review to deliver accurate, contextually grounded answers to legal queries.


About the Client

Doyele, O’Keefe & Associates is a well-established legal services provider specializing in legal contract analysis and query resolution. Their work involves reviewing complex contracts, policies, clauses, and documents to provide accurate and timely insights.

Country

Ireland

Industry

Legal Services

Hours Invested

3000+

Team Size

3 Resources

Business Problem

Despite the growing adoption of AI in almost every industry, specialized AI software for law firms remains scarce. The core challenge for our client was the manual effort required to interpret and retain the details of complex documents. The process was time-intensive, mentally demanding, prone to oversight, and placed heavy demands on reviewers' memory.

Project Overview

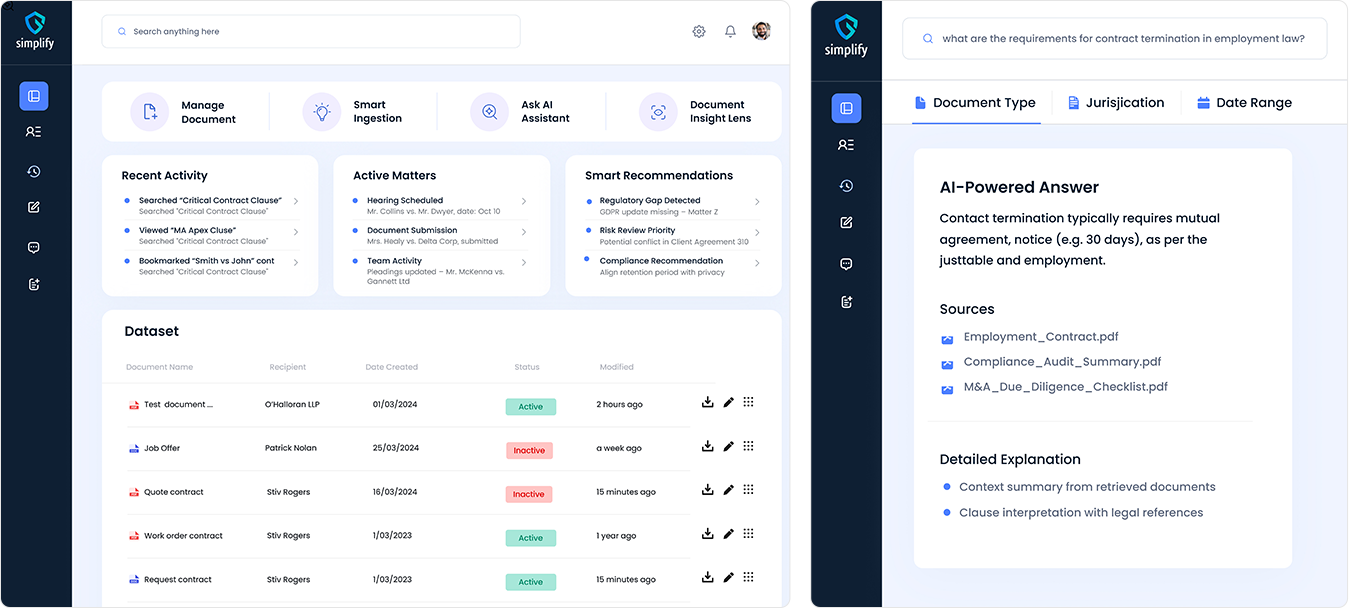

For a law firm dealing with a growing volume of documents, Radixweb was asked to design a legal software solution that would make document-heavy work faster, more accurate, and less draining for the team. The task was to create an AI-powered legal document search system that could surface answers to legal questions without requiring exhaustive manual review.

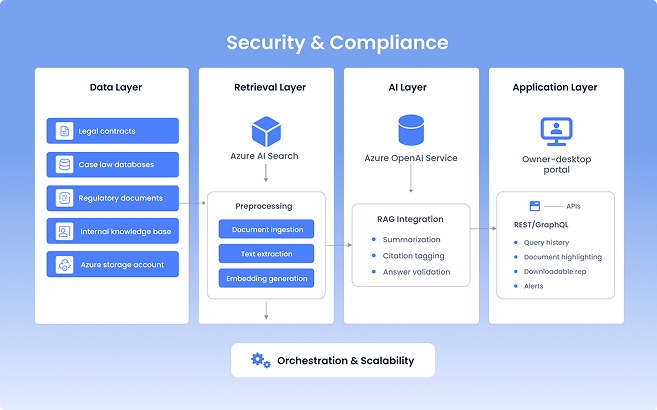

Based on these requirements, we recommended a Retrieval-Augmented Generation (RAG) system built entirely on Azure. The AI legal software uses a serverless architecture, so it automatically adjusts to workload fluctuations and handles queries at scale.

This structure balanced technical feasibility against operational demands, resulting in a system that reduces manual strain while applying AI to legal research with the high accuracy law firms require.

Direct Input from Our Client

Accuracy is everything in legal work. It used to take hours just to pull answers from documents. Radix understood the stakes. RAG is apparently all we needed and they implemented it beautifully. The system gives us the spot-on answers in seconds and it’s fast enough to keep up with our work as well.

Coordination with the client was key. We needed to understand not just their workflows but how their team approached research. The impact was immediate after implementing the RAG system. It was satisfying for the whole team to see it perform exactly as designed.

Faisaluddin Saiyed

Challenges Faced

- Ensuring Query Accuracy - Queries must return precise answers. RAG in legal tech requires reliable embedding, retrieval, and context management to avoid incorrect or misleading results.

- Handling Large Document Volumes - To process thousands of legal documents, the system needed to retrieve information quickly without performance degradation or excessive memory use.

- Context Window Management - Documents had to be split into chunks, and context assembled for each RAG query, without losing crucial information; this was critical for accurate responses.

- Latency Minimization - Query responses had to stay fast despite large datasets and API calls across multiple services, which called for caching and efficient indexing.
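The case study does not say how documents were chunked. Purely as an illustration of the context window challenge, here is a minimal Python sketch of fixed-size chunking with overlap. The word-based window and the `max_words` and `overlap` values are assumptions; a production system would more likely count tokens and split on clause boundaries.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of at most max_words words, each sharing
    `overlap` words with the previous window (illustrative values only)."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the document
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, max_words=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

The overlap means text near a chunk boundary appears in two adjacent chunks, which lowers the risk of separating a clause from its surrounding context.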

How the Solution Came Together

RAG-Based Architecture Design

The RAG pipeline integrated into the legal document automation software retrieves verified content from the client's documents before generating a response, so answers are grounded in the source text for factual accuracy.
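A minimal sketch of the retrieve-then-generate flow described above, under stated assumptions: embeddings are precomputed plain lists of floats, `generate` is a hypothetical stand-in for the call to the language model, and the `k` and `min_score` thresholds are illustrative values, not figures from the project.

```python
import math
from typing import Callable

# A stored passage: (text, precomputed embedding). Embeddings here are toy vectors.
Passage = tuple[str, list[float]]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def answer(question: str, query_vec: list[float], passages: list[Passage],
           generate: Callable[[str], str], k: int = 3, min_score: float = 0.5) -> str:
    # Retrieve: rank every stored passage by similarity to the query embedding.
    scored = sorted(((cosine(query_vec, vec), text) for text, vec in passages), reverse=True)
    context = [text for score, text in scored[:k] if score >= min_score]
    # Refuse rather than let the model answer without supporting text.
    if not context:
        return "No supporting passage found."
    # Generate: the model sees only the retrieved excerpts and the question.
    excerpts = "\n".join(f"- {text}" for text in context)
    prompt = f"Answer using only these excerpts:\n{excerpts}\n\nQuestion: {question}"
    return generate(prompt)
```

Returning a fixed message when no passage clears the threshold is one way to keep the model from answering without supporting text; the source does not say how the project handled this case.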

Hybrid Search Functionality

We combined keyword-based and vector-based search within Azure AI Search to balance semantic understanding with exact legal-term matching. The retrieval layer scores the results of each search type and merges them into a single ranked list.
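Azure AI Search documents Reciprocal Rank Fusion (RRF) as the method it uses to merge keyword and vector results in hybrid queries. The Python sketch below reimplements RRF locally to show how two rankings become one. The document IDs are hypothetical, and whether the project added custom weighting on top is not stated; `k = 60` is the constant commonly used with RRF.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several rankings: each document earns 1 / (k + rank) from every
    list it appears in, and the summed scores determine the final order."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


keyword_hits = ["clause-12", "clause-7", "clause-3"]  # e.g. exact-term matches
vector_hits = ["clause-7", "clause-9", "clause-12"]   # e.g. semantic matches
print([doc for doc, _ in reciprocal_rank_fusion([keyword_hits, vector_hits])])
# ['clause-7', 'clause-12', 'clause-9', 'clause-3']
```

Clauses found by both searches (clause-7 and clause-12 here) rise above those found by only one, which is how hybrid retrieval rewards agreement between exact-term and semantic matches.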

A Fully Serverless Design

The entire solution runs on Azure Functions and Azure Service Bus. The AI-driven document retrieval workflows were split into independent, stateless functions.

Asynchronous Processing Pipeline

Azure Service Bus coordinates message exchange between services. This enables concurrent document processing, so the system can handle high query volumes without latency issues.
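The Service Bus code is not shown in the source, so the sketch below uses Python's `asyncio.Queue` as a local stand-in for a message queue to illustrate the same producer/consumer decoupling: documents are enqueued once, and a fixed pool of workers drains the queue concurrently. The worker count and the `asyncio.sleep` placeholder for parsing and embedding are assumptions, and this is not the Azure SDK.

```python
import asyncio

async def worker(name: str, queue: asyncio.Queue, results: list[str]) -> None:
    # Each worker pulls one message at a time, like a queue consumer.
    while True:
        doc_id = await queue.get()
        try:
            await asyncio.sleep(0.01)  # placeholder for parsing + embedding one document
            results.append(f"{doc_id} processed by {name}")
        finally:
            queue.task_done()  # acknowledge the message even if processing failed

async def process_all(doc_ids: list[str], concurrency: int = 4) -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    results: list[str] = []
    for doc_id in doc_ids:
        queue.put_nowait(doc_id)  # producer: enqueue work without waiting for it
    workers = [asyncio.create_task(worker(f"w{i}", queue, results)) for i in range(concurrency)]
    await queue.join()  # wait until every message has been acknowledged
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    processed = asyncio.run(process_all([f"contract-{i}" for i in range(10)], concurrency=3))
    print(len(processed))  # 10
```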

Context-Aware Prompt Engineering

Prompts were dynamically structured using metadata such as clause type and jurisdiction, so the legal document automation software interprets questions with better contextual awareness.
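A sketch of metadata-aware prompt assembly, assuming each retrieved chunk carries hypothetical `clause_type`, `jurisdiction`, and `source` fields. The field names, instruction wording, and jurisdiction filter are illustrative, not the project's actual prompt.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Chunk:
    # Hypothetical metadata attached to each indexed chunk at ingestion time.
    text: str
    source: str
    clause_type: str
    jurisdiction: str

def build_prompt(question: str, chunks: list[Chunk], jurisdiction: Optional[str] = None) -> str:
    # If the question targets a jurisdiction, drop chunks from other jurisdictions.
    if jurisdiction is not None:
        chunks = [c for c in chunks if c.jurisdiction == jurisdiction]
    lines = [
        "You are assisting with legal document review.",
        "Answer only from the numbered excerpts and cite excerpt numbers.",
        "If the excerpts do not answer the question, say so.",
        "",
    ]
    # Label each excerpt with its clause type, jurisdiction, and source file.
    for i, c in enumerate(chunks, start=1):
        lines.append(f"[{i}] ({c.clause_type}, {c.jurisdiction}; {c.source}) {c.text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)
```

Surfacing the metadata next to each excerpt gives the model the context a lawyer would check first (which clause, which jurisdiction) and makes its citations traceable to a file.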

Automated Data Ingestion Pipeline

Our AI developers built an automated upload and indexing pipeline that lets the client continuously add new legal documents without manual preprocessing or downtime.
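One common way to add documents continuously without reprocessing the whole corpus is to fingerprint each file and re-index only new or changed content. The sketch below assumes that approach (the source does not describe the mechanism); the in-memory `index` dict is a hypothetical stand-in for whatever store tracks what has already been indexed.

```python
import hashlib

def ingest(documents: dict[str, str], index: dict[str, str]) -> list[str]:
    """Index new or changed documents and return the names that were (re)indexed.

    `index` maps document name -> SHA-256 hex digest of the content last indexed.
    """
    updated = []
    for name, content in documents.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if index.get(name) == digest:
            continue  # unchanged since the last run: skip re-embedding
        # A real pipeline would chunk, embed, and upsert into the search index here.
        index[name] = digest
        updated.append(name)
    return updated
```

Because unchanged files are skipped, repeated runs are cheap, and new uploads can be picked up on a schedule or an upload event without taking the search index offline.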


Key Technologies Used

RAG Framework

Azure AI Search

To enable hybrid search that combines semantic vector retrieval with keyword matching to locate relevant information.

Azure Service Bus

For managing asynchronous messaging between components, coordinating document ingestion, embedding generation, and query processing.

Azure Functions

To host serverless, event-driven tasks and workflows for legal document processing, query routing, and embedding creation.

Azure Storage Account

Stored legal documents, embeddings, and metadata with encryption and role-based access control to keep client data secure.

Azure OpenAI Service

Generated embeddings and enabled semantic understanding. This formed the foundation of the RAG system for legal document search and Q&A.

Project Outcomes and Benefits

Development of the AI platform delivered immediate results for the client, including faster document review, 100% correct query answers, reduced overhead, and the capacity to handle larger workloads.

500+ Concurrent Queries Handled

The AI-powered legal research tool scales automatically, with no latency spikes even during heavy workloads.

Operational Cost Reduction

Serverless execution and optimized Azure Storage usage reduced operational costs by around 40%, compared to running always-on virtual machines.

Automated Query Routing

Asynchronous pipelines process thousands of documents per hour, automatically generating embeddings and routing queries.

97% Coverage of Complex Queries

Previously overlooked or buried clauses are now retrieved. The system generates near-complete answers for nuanced legal questions.
